Implementing Face Detector on USB Camera Connected to Raspberry Pi using Kinesis Video Streams and Rekognition Video

Using Kinesis Video Streams and Rekognition Video, I implemented a face detector on a USB camera connected to a Raspberry Pi. This post walks through the implementation.

Overview

Prerequisites

Video Producer

In this post, the following is used as a video producer:

- Raspberry Pi 4B with 4GB RAM

- Ubuntu 23.10 (installed using Raspberry Pi Imager)

- USB Camera

- GStreamer

AWS Resources

This post uses the following:

- AWS SAM CLI

- Python 3.11

Creating SAM Application

You can pull the example code used in this post from my GitHub repository.

Directory Structure

/
|-- src/
|   |-- app.py
|   `-- requirements.txt
|-- samconfig.toml
`-- template.yaml

AWS SAM Template

AWSTemplateFormatVersion: 2010-09-09
Transform: AWS::Serverless-2016-10-31
Description: face-detector-using-kinesis-video-streams

Resources:
  Function:
    Type: AWS::Serverless::Function
    Properties:
      FunctionName: face-detector-function
      CodeUri: src/
      Handler: app.lambda_handler
      Runtime: python3.11
      Architectures:
        - arm64
      Timeout: 3
      MemorySize: 128
      Role: !GetAtt FunctionIAMRole.Arn
      Events:
        KinesisEvent:
          Type: Kinesis
          Properties:
            Stream: !GetAtt KinesisStream.Arn
            MaximumBatchingWindowInSeconds: 10
            MaximumRetryAttempts: 3
            StartingPosition: LATEST

  FunctionIAMRole:
    Type: AWS::IAM::Role
    Properties:
      RoleName: face-detector-function-role
      AssumeRolePolicyDocument:
        Version: 2012-10-17
        Statement:
          - Effect: Allow
            Principal:
              Service: lambda.amazonaws.com
            Action: sts:AssumeRole
      ManagedPolicyArns:
        - arn:aws:iam::aws:policy/service-role/AWSLambdaBasicExecutionRole
        - arn:aws:iam::aws:policy/service-role/AWSLambdaKinesisExecutionRole
      Policies:
        - PolicyName: policy
          PolicyDocument:
            Version: 2012-10-17
            Statement:
              - Effect: Allow
                Action:
                  - kinesisvideo:GetHLSStreamingSessionURL
                  - kinesisvideo:GetDataEndpoint
                Resource: !GetAtt KinesisVideoStream.Arn

  KinesisVideoStream:
    Type: AWS::KinesisVideo::Stream
    Properties:
      Name: face-detector-kinesis-video-stream
      DataRetentionInHours: 24

  RekognitionCollection:
    Type: AWS::Rekognition::Collection
    Properties:
      CollectionId: FaceCollection

  RekognitionStreamProcessor:
    Type: AWS::Rekognition::StreamProcessor
    Properties:
      Name: face-detector-rekognition-stream-processor
      KinesisVideoStream:
        Arn: !GetAtt KinesisVideoStream.Arn
      KinesisDataStream:
        Arn: !GetAtt KinesisStream.Arn
      RoleArn: !GetAtt RekognitionStreamProcessorIAMRole.Arn
      FaceSearchSettings:
        CollectionId: !Ref RekognitionCollection
        FaceMatchThreshold: 80
      DataSharingPreference:
        OptIn: false

  KinesisStream:
    Type: AWS::Kinesis::Stream
    Properties:
      Name: face-detector-kinesis-stream
      StreamModeDetails:
        StreamMode: ON_DEMAND

  RekognitionStreamProcessorIAMRole:
    Type: AWS::IAM::Role
    Properties:
      RoleName: face-detector-rekognition-stream-processor-role
      AssumeRolePolicyDocument:
        Version: 2012-10-17
        Statement:
          - Effect: Allow
            Principal:
              Service: rekognition.amazonaws.com
            Action: sts:AssumeRole
      ManagedPolicyArns:
        - arn:aws:iam::aws:policy/service-role/AmazonRekognitionServiceRole
      Policies:
        - PolicyName: policy
          PolicyDocument:
            Version: 2012-10-17
            Statement:
              - Effect: Allow
                Action:
                  - kinesis:PutRecord
                  - kinesis:PutRecords
                Resource:
                  - !GetAtt KinesisStream.Arn

Python Script

requirements.txt

Leave it empty.

app.py

The Rekognition Video stream processor streams detected face data to the Kinesis Data Stream, and the Lambda function receives each record as a Base64-encoded string in record['kinesis']['data']. For information about the data structure, please refer to the official documentation.

The Lambda function generates an HLS URL using the KinesisVideoArchivedMedia#get_hls_streaming_session_url API (see the get_hls_streaming_session_url function in the script).

import base64
import json
import logging
from datetime import datetime, timedelta, timezone
from functools import cache

import boto3

JST = timezone(timedelta(hours=9))
kvs_client = boto3.client('kinesisvideo')
logger = logging.getLogger(__name__)
logger.setLevel(logging.INFO)


def lambda_handler(event: dict, context: dict) -> dict:
    for record in event['Records']:
        # Each Kinesis record carries the stream processor event as Base64-encoded JSON.
        base64_data = record['kinesis']['data']
        stream_processor_event = json.loads(base64.b64decode(base64_data).decode())

        # For more information about the result, please refer to https://docs.aws.amazon.com/rekognition/latest/dg/streaming-video-kinesis-output.html
        if not stream_processor_event['FaceSearchResponse']:
            continue

        logger.info(stream_processor_event)
        url = get_hls_streaming_session_url(stream_processor_event)
        logger.info(url)

    return {
        'statusCode': 200,
    }


@cache
def get_kvs_am_client(api_name: str, stream_arn: str):
    # See https://boto3.amazonaws.com/v1/documentation/api/latest/reference/services/kinesisvideo/client/get_data_endpoint.html
    endpoint = kvs_client.get_data_endpoint(
        APIName=api_name.upper(),
        StreamARN=stream_arn
    )['DataEndpoint']
    return boto3.client('kinesis-video-archived-media', endpoint_url=endpoint)


def get_hls_streaming_session_url(stream_processor_event: dict) -> str:
    # See https://boto3.amazonaws.com/v1/documentation/api/latest/reference/services/kinesis-video-archived-media/client/get_hls_streaming_session_url.html
    kinesis_video = stream_processor_event['InputInformation']['KinesisVideo']
    stream_arn = kinesis_video['StreamArn']
    kvs_am_client = get_kvs_am_client('get_hls_streaming_session_url', stream_arn)

    # Play back one minute of video starting at the server timestamp of the event.
    start_timestamp = datetime.fromtimestamp(kinesis_video['ServerTimestamp'], JST)
    end_timestamp = datetime.fromtimestamp(kinesis_video['ServerTimestamp'], JST) + timedelta(minutes=1)

    return kvs_am_client.get_hls_streaming_session_url(
        StreamARN=stream_arn,
        PlaybackMode='ON_DEMAND',
        HLSFragmentSelector={
            'FragmentSelectorType': 'SERVER_TIMESTAMP',
            'TimestampRange': {
                'StartTimestamp': start_timestamp,
                'EndTimestamp': end_timestamp,
            },
        },
        ContainerFormat='FRAGMENTED_MP4',
        Expires=300,
    )['HLSStreamingSessionURL']
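The decoding step can be tried locally without any AWS access. The following is a minimal sketch, assuming the record layout used by the script above; the helper names decode_kinesis_record, matched_people and make_test_event are hypothetical and not part of the original app.py.

```python
import base64
import json


def decode_kinesis_record(record: dict) -> dict:
    """Decode one Lambda Kinesis record into the stream processor event."""
    return json.loads(base64.b64decode(record["kinesis"]["data"]).decode())


def matched_people(stream_processor_event: dict) -> list[tuple[str, float]]:
    """Return (ExternalImageId, Similarity) pairs for every matched face."""
    matches = []
    for face in stream_processor_event.get("FaceSearchResponse", []):
        for matched in face.get("MatchedFaces", []):
            external_id = matched["Face"].get("ExternalImageId", "")
            matches.append((external_id, matched["Similarity"]))
    return matches


def make_test_event(payload: dict) -> dict:
    """Wrap a payload the way the Kinesis event source does (hypothetical test helper)."""
    data = base64.b64encode(json.dumps(payload).encode()).decode()
    return {"Records": [{"kinesis": {"data": data}}]}


if __name__ == "__main__":
    # Trimmed-down payload that only keeps the fields used above.
    sample = {
        "FaceSearchResponse": [
            {"MatchedFaces": [{"Similarity": 99.9, "Face": {"ExternalImageId": "person-1"}}]}
        ]
    }
    for record in make_test_event(sample)["Records"]:
        print(matched_people(decode_kinesis_record(record)))
```

Keeping the decoding separate from the boto3 calls makes it possible to check the parsing logic before deploying the function.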

Build and Deploy

Build and deploy with the following commands.

sam build

sam deploy

Indexing Faces

Index the faces that you want the USB camera to detect. Before running the following command, replace the placeholders <YOUR_BUCKET>, <YOUR_OBJECT> and <PERSON_ID> with your actual values.

aws rekognition index-faces \
  --image '{"S3Object": {"Bucket": "<YOUR_BUCKET>", "Name": "<YOUR_OBJECT>"}}' \
  --collection-id FaceCollection \
  --external-image-id <PERSON_ID>

Rekognition does not store actual images in collections.

For each face detected, Amazon Rekognition extracts facial features and stores the feature information in a database. In addition, the command stores metadata for each face that’s detected in the specified face collection. Amazon Rekognition doesn’t store the actual image bytes.
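If you prefer to index faces from Python, the same request can be sent with the boto3 Rekognition client's index_faces call. This is a minimal sketch rather than code from the repository: the function name index_face is hypothetical, and the client is passed in as an argument so the function can be exercised with a stub.

```python
def index_face(rekognition_client, bucket: str, key: str, person_id: str,
               collection_id: str = "FaceCollection") -> list[str]:
    """Index the faces found in an S3 image and return the assigned FaceIds.

    rekognition_client is expected to be boto3.client("rekognition").
    """
    response = rekognition_client.index_faces(
        CollectionId=collection_id,
        Image={"S3Object": {"Bucket": bucket, "Name": key}},
        ExternalImageId=person_id,
    )
    # One FaceRecord is returned per face that was indexed from the image.
    return [face_record["Face"]["FaceId"] for face_record in response.get("FaceRecords", [])]


# Usage (requires AWS credentials and the deployed collection):
#   import boto3
#   face_ids = index_face(boto3.client("rekognition"), "<YOUR_BUCKET>", "<YOUR_OBJECT>", "<PERSON_ID>")
```

The ExternalImageId set here is what later appears in the matched face data, so a stable identifier per person is a sensible choice.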

Setting up Video Producer

In this post, a Raspberry Pi 4B with 4GB RAM running Ubuntu 23.10 is used as the video producer.

Build the AWS-provided Amazon Kinesis Video Streams CPP Producer, GStreamer Plugin and JNI.

Although AWS provides Docker images containing the GStreamer plugin, these did not work on my Raspberry Pi.

Building GStreamer Plugin

Run the following commands to build the plugin. Depending on your machine specs, it may take about 20 minutes or more.

sudo apt update
sudo apt upgrade
sudo apt install \
  make \
  cmake \
  build-essential \
  m4 \
  autoconf \
  default-jdk
sudo apt install \
  libssl-dev \
  libcurl4-openssl-dev \
  liblog4cplus-dev \
  libgstreamer1.0-dev \
  libgstreamer-plugins-base1.0-dev \
  gstreamer1.0-plugins-base-apps \
  gstreamer1.0-plugins-bad \
  gstreamer1.0-plugins-good \
  gstreamer1.0-plugins-ugly \
  gstreamer1.0-tools

git clone https://github.com/awslabs/amazon-kinesis-video-streams-producer-sdk-cpp.git
mkdir -p amazon-kinesis-video-streams-producer-sdk-cpp/build
cd amazon-kinesis-video-streams-producer-sdk-cpp/build

sudo cmake .. -DBUILD_GSTREAMER_PLUGIN=ON -DBUILD_JNI=TRUE
sudo make

Check the build result with the following commands.

cd ~/amazon-kinesis-video-streams-producer-sdk-cpp

export GST_PLUGIN_PATH=`pwd`/build

export LD_LIBRARY_PATH=`pwd`/open-source/local/lib

gst-inspect-1.0 kvssink

Output like the following should be displayed.

Factory Details:

Rank primary + 10 (266)

Long-name KVS Sink

Klass Sink/Video/Network

Description GStreamer AWS KVS plugin

Author AWS KVS <[email protected]>

...

So that these variables are still set after the next startup, it is useful to add the export lines to your ~/.profile.

echo "" >> ~/.profile

echo "# GStreamer" >> ~/.profile

echo "export GST_PLUGIN_PATH=$GST_PLUGIN_PATH" >> ~/.profile

echo "export LD_LIBRARY_PATH=$LD_LIBRARY_PATH" >> ~/.profile

Running GStreamer

Connect the USB camera to your device, and run the following command.

gst-launch-1.0 -v v4l2src device=/dev/video0 \
  ! videoconvert \
  ! video/x-raw,format=I420,width=320,height=240,framerate=5/1 \
  ! x264enc bframes=0 key-int-max=45 bitrate=500 tune=zerolatency \
  ! video/x-h264,stream-format=avc,alignment=au \
  ! kvssink stream-name=<KINESIS_VIDEO_STREAM_NAME> storage-size=128 access-key="<YOUR_ACCESS_KEY>" secret-key="<YOUR_SECRET_KEY>" aws-region="<YOUR_AWS_REGION>"

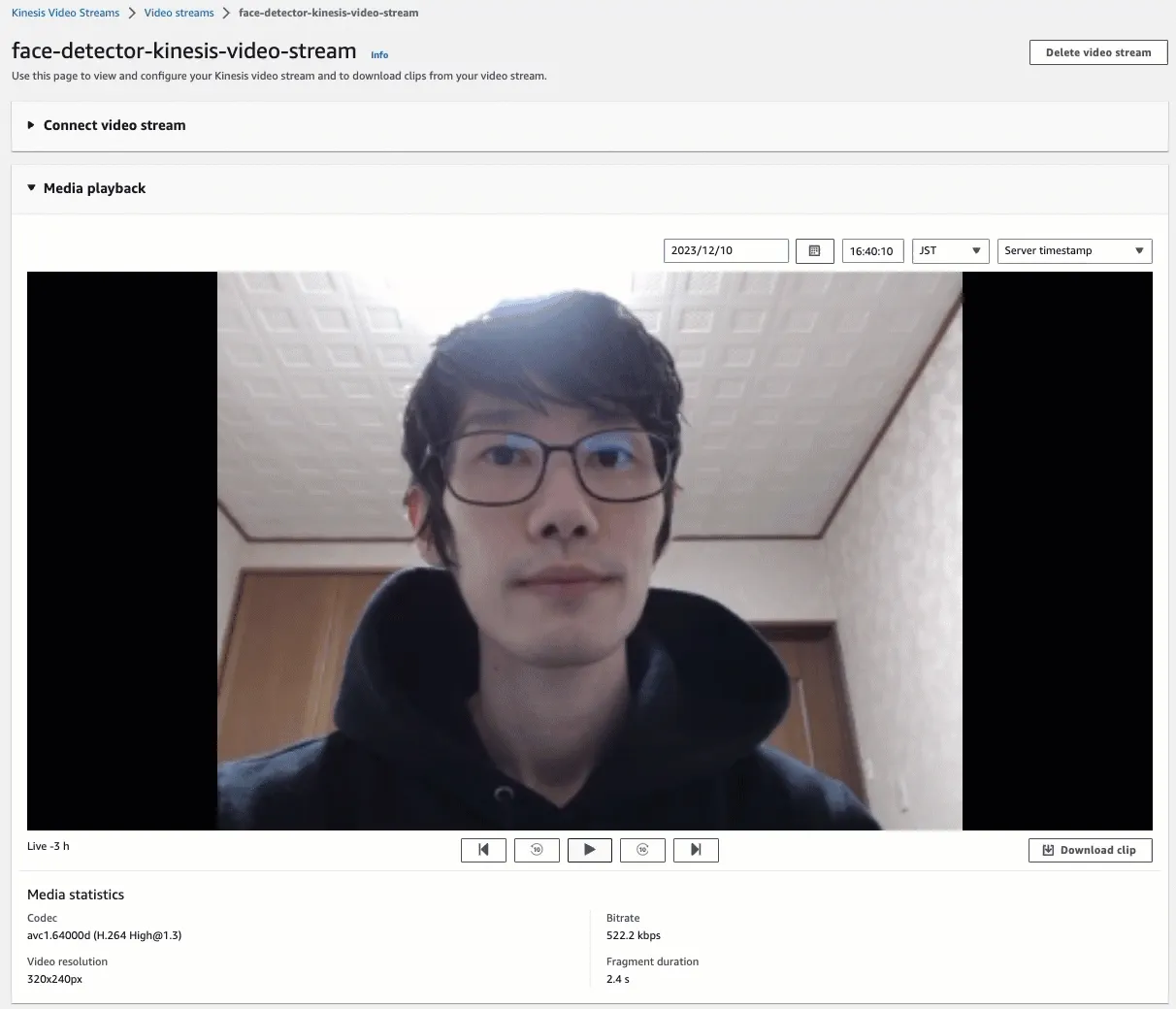

You can check the live streaming video from the camera in the Kinesis Video Streams management console.
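If you want to start the pipeline from a script rather than typing it by hand, the command can be assembled as an argument list. The following is a rough sketch that only reuses the elements and properties from the gst-launch-1.0 command above. The function names are hypothetical, and reading the credentials from the AWS_ACCESS_KEY_ID, AWS_SECRET_ACCESS_KEY and AWS_DEFAULT_REGION environment variables is an assumption made for this example, chosen to keep keys out of the script.

```python
import os


def build_gst_command(stream_name: str, region: str, access_key: str, secret_key: str,
                      device: str = "/dev/video0") -> list[str]:
    """Build the gst-launch-1.0 argument list for the pipeline shown above."""
    return [
        "gst-launch-1.0", "-v",
        "v4l2src", f"device={device}",
        "!", "videoconvert",
        "!", "video/x-raw,format=I420,width=320,height=240,framerate=5/1",
        "!", "x264enc", "bframes=0", "key-int-max=45", "bitrate=500", "tune=zerolatency",
        "!", "video/x-h264,stream-format=avc,alignment=au",
        "!", "kvssink", f"stream-name={stream_name}", "storage-size=128",
        f"access-key={access_key}", f"secret-key={secret_key}", f"aws-region={region}",
    ]


def command_from_env(stream_name: str, env=os.environ) -> list[str]:
    """Read credentials from environment variables (an assumption of this sketch)."""
    return build_gst_command(
        stream_name,
        env["AWS_DEFAULT_REGION"],
        env["AWS_ACCESS_KEY_ID"],
        env["AWS_SECRET_ACCESS_KEY"],
    )


# Usage on the Raspberry Pi (GST_PLUGIN_PATH and LD_LIBRARY_PATH must already be exported):
#   import subprocess
#   subprocess.run(command_from_env("face-detector-kinesis-video-stream"), check=True)
```

Because the list is passed to subprocess.run without a shell, the "!" separators need no escaping.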

Testing

Starting Rekognition Video stream processor

Start the Rekognition Video stream processor. It will consume the Kinesis Video Stream, search for faces using the face collection, and stream the results to the Kinesis Data Stream.

aws rekognition start-stream-processor --name face-detector-rekognition-stream-processor

Check that the output shows "Status": "RUNNING".

aws rekognition describe-stream-processor --name face-detector-rekognition-stream-processor | grep "Status"
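The stream processor may take a moment to leave the STARTING state, so a script might want to poll instead of checking once. Below is a minimal sketch using the boto3 Rekognition client's describe_stream_processor call. The function name wait_until_running and its timeout and polling defaults are hypothetical choices for this example.

```python
import time


def wait_until_running(rekognition_client, name: str, timeout_seconds: float = 120.0,
                       poll_seconds: float = 5.0, sleep=time.sleep) -> str:
    """Poll the stream processor until its status is RUNNING.

    rekognition_client is expected to be boto3.client("rekognition").
    Raises RuntimeError if the processor reports FAILED, TimeoutError on timeout.
    """
    deadline = time.monotonic() + timeout_seconds
    while True:
        status = rekognition_client.describe_stream_processor(Name=name)["Status"]
        if status == "RUNNING":
            return status
        if status == "FAILED":
            raise RuntimeError(f"stream processor {name} failed to start")
        if time.monotonic() >= deadline:
            raise TimeoutError(f"stream processor {name} is still {status}")
        sleep(poll_seconds)


# Usage (requires AWS credentials):
#   import boto3
#   wait_until_running(boto3.client("rekognition"), "face-detector-rekognition-stream-processor")
```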

Capturing Faces

Once the USB camera captures a face, the video data is processed in the following order:

- The video data will be streamed to the Kinesis Video Stream.

- The streamed data will be processed by the Rekognition Video stream processor.

- The stream processor results will be streamed to the Kinesis Data Stream.

- HLS URLs will be generated by the Lambda function.

Check the Lambda function log records with the following command.

sam logs -n Function --stack-name face-detector-using-kinesis-video-streams --tail

The log records include the stream processor event data like the following.

{
  "InputInformation": {
    "KinesisVideo": {
      "StreamArn": "arn:aws:kinesisvideo:<AWS_REGION>:<AWS_ACCOUNT_ID>:stream/face-detector-kinesis-video-stream/xxxxxxxxxxxxx",
      "FragmentNumber": "91343852333181501717324262640137742175000164731",
      "ServerTimestamp": 1702208586.022,
      "ProducerTimestamp": 1702208585.699,
      "FrameOffsetInSeconds": 0.0
    }
  },
  "StreamProcessorInformation": {"Status": "RUNNING"},
  "FaceSearchResponse": [
    {
      "DetectedFace": {
        "BoundingBox": {
          "Height": 0.4744676,
          "Width": 0.29107505,
          "Left": 0.33036956,
          "Top": 0.19599175
        },
        "Confidence": 99.99677,
        "Landmarks": [
          {"X": 0.41322955, "Y": 0.33761832, "Type": "eyeLeft"},
          {"X": 0.54405355, "Y": 0.34024307, "Type": "eyeRight"},
          {"X": 0.424819, "Y": 0.5417343, "Type": "mouthLeft"},
          {"X": 0.5342691, "Y": 0.54362005, "Type": "mouthRight"},
          {"X": 0.48934412, "Y": 0.43806323, "Type": "nose"}
        ],
        "Pose": {"Pitch": 5.547308, "Roll": 0.85795176, "Yaw": 4.76913},
        "Quality": {"Brightness": 57.938313, "Sharpness": 46.0298}
      },
      "MatchedFaces": [
        {
          "Similarity": 99.986176,
          "Face": {
            "BoundingBox": {
              "Height": 0.417963,
              "Width": 0.406223,
              "Left": 0.28826,
              "Top": 0.242463
            },
            "FaceId": "xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx",
            "Confidence": 99.996605,
            "ImageId": "xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx",
            "ExternalImageId": "iwasa"
          }
        }
      ]
    }
  ]
}

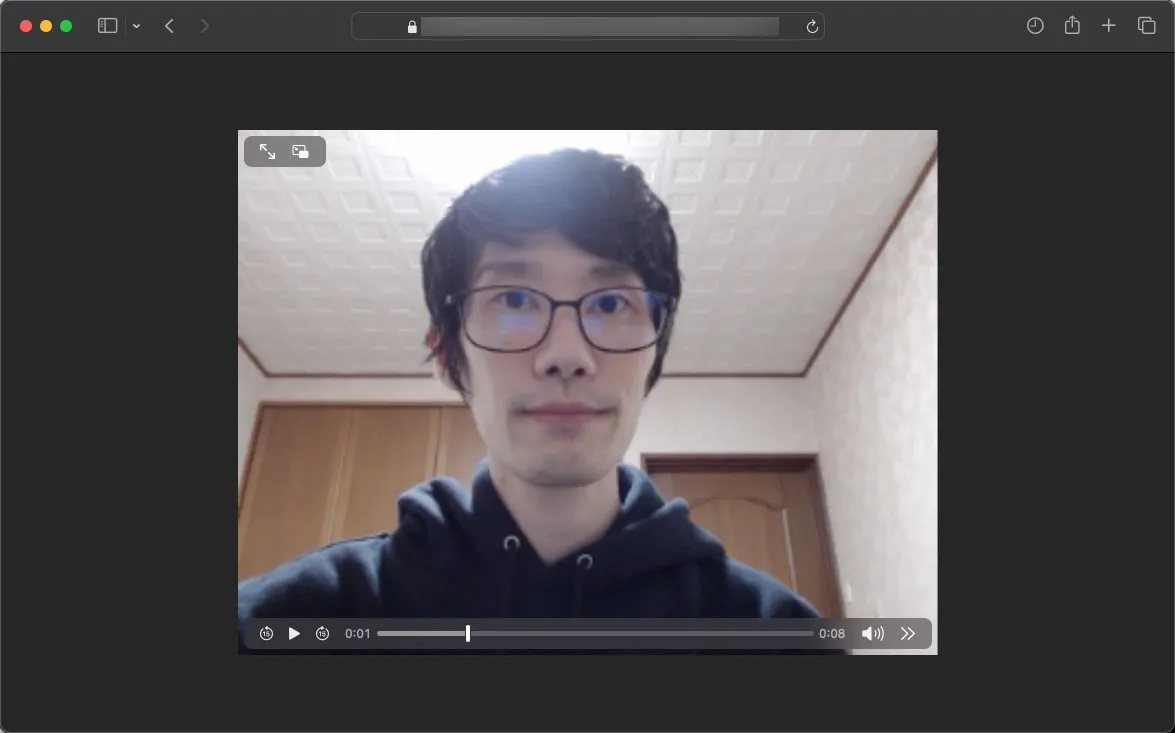
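The BoundingBox values in the event are ratios of the frame size, not pixels. To draw the box on a frame they need to be scaled by the frame dimensions. Here is a small sketch of that conversion, assuming the 320x240 resolution used in the GStreamer pipeline; the function name to_pixel_box is hypothetical.

```python
def to_pixel_box(bounding_box: dict, frame_width: int, frame_height: int) -> dict:
    """Convert a Rekognition BoundingBox (ratios of the frame) to pixel values."""
    return {
        "left": round(bounding_box["Left"] * frame_width),
        "top": round(bounding_box["Top"] * frame_height),
        "width": round(bounding_box["Width"] * frame_width),
        "height": round(bounding_box["Height"] * frame_height),
    }


if __name__ == "__main__":
    # DetectedFace.BoundingBox from the sample event above.
    detected = {"Height": 0.4744676, "Width": 0.29107505, "Left": 0.33036956, "Top": 0.19599175}
    print(to_pixel_box(detected, frame_width=320, frame_height=240))
    # prints {'left': 106, 'top': 47, 'width': 93, 'height': 114}
```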

You can also find the HLS URL in the log records, like the following.

https://x-xxxxxxxx.kinesisvideo.<AWS_REGION>.amazonaws.com/hls/v1/getHLSMasterPlaylist.m3u8?SessionToken=xxxxxxxxxx

To watch the on-demand video, open the URL using Safari or Edge.
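The video behind the URL covers the one-minute window that starts at the event's ServerTimestamp, as computed in the Lambda function. The sketch below isolates that window calculation so it can be checked against the sample event; the function name playback_window is hypothetical.

```python
from datetime import datetime, timedelta, timezone

JST = timezone(timedelta(hours=9))


def playback_window(server_timestamp: float,
                    length: timedelta = timedelta(minutes=1),
                    tz: timezone = JST) -> tuple[datetime, datetime]:
    """Return the (start, end) datetimes for an on-demand HLS session."""
    start = datetime.fromtimestamp(server_timestamp, tz)
    return start, start + length


if __name__ == "__main__":
    # ServerTimestamp from the sample event above.
    start, end = playback_window(1702208586.022)
    print(start.isoformat(), end.isoformat())
```

Because the datetimes are timezone-aware, the choice of JST only affects how they are displayed, not which instant they refer to.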

Cleaning Up

Clean up the provisioned AWS resources with the following commands.

aws rekognition stop-stream-processor --name face-detector-rekognition-stream-processor

sam delete

Pricing

Here is an example simulation based on the following conditions.

- The AWS region ap-northeast-1 will be used.

- The USB camera will always stream video data to the Kinesis Video Stream. The data will amount to about 1GB per day and will be retained for one day.

- The Rekognition Video stream processor will always analyze the Kinesis Video Stream.

- The face collection contains 10 faces.

- The Kinesis Data Stream will always have one provisioned shard.

- The Lambda function (Arm/128MB) will be invoked every second. Billable duration will be about 100ms per invocation.

- Users will watch on-demand video using HLS URLs for one hour per day.

Kinesis Video Streams

| Item | Price |

|---|---|

| Data Ingested into Kinesis Video Streams (per GB data ingested) | $0.01097 |

| Data Consumed from Kinesis Video Streams (per GB data egressed) | $0.01097 |

| Data Consumed from Kinesis Video Streams using HLS (per GB data egressed) | $0.01536 |

| Data Stored in Kinesis Video Streams (per GB-Month data stored) | $0.025 |

Simulation

| Item | Expression | Price |

|---|---|---|

| Data Ingested | 1 GB * 31 days * $0.01097 | $0.34007 |

| Data Consumed | 1 GB * 31 days * $0.01097 | $0.34007 |

| Data Consumed using HLS | (1/24) GB * 31 days * $0.01536 (one hour of a 1 GB/day stream) | $0.01984 |

| Data Stored | 1 GB-month * $0.025 (about 1 GB retained at any time) | $0.025 |

| Total | - | $0.7 |

Rekognition Video

| Item | Price |

|---|---|

| Face Vector Storage | $0.000013/face metadata per month |

| Face Search | $0.15/min |

Simulation

| Item | Expression | Price |

|---|---|---|

| Face Vector Storage | 10 faces * $0.000013 | $0.00013 |

| Face Search | 60 minutes * 24 hours * 31 days * $0.15 | $6,696 |

| Total | - | $6,696 |

Kinesis Data Streams

| Item | Price |

|---|---|

| Shard Hour (1MB/second ingress, 2MB/second egress) | $0.0195 |

| PUT Payload Units, per 1,000,000 units | $0.0215 |

Simulation

| Item | Expression | Price |

|---|---|---|

| Shard Hour | 1 shard * 24 hours * 31 days * $0.0195 | $14.508 |

| PUT Payload Units | 1 unit * 60 seconds * 60 minutes * 24 hours * 31 days * 0.0215 / 1000000 | $0.0575856 |

| Total | - | $14.6 |

Lambda

| Item | Price |

|---|---|

| Price per 1ms (128MB) | $0.0000000017 |

Simulation

| Item | Expression | Price |

|---|---|---|

| Price per 1ms | 100ms * 60 seconds * 60 minutes * 24 hours * 31 days * $0.0000000017 | $0.455328 |

| Total | - | $0.5 |
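The Rekognition Video, Kinesis Data Streams and Lambda simulations above can be reproduced with a few lines of arithmetic. The following sketch uses the unit prices from the tables and the 31-day month from the conditions; the function names are hypothetical, and Lambda request charges are not included, as in the table.

```python
DAYS = 31
MINUTES_PER_MONTH = 60 * 24 * DAYS
SECONDS_PER_MONTH = 60 * MINUTES_PER_MONTH


def rekognition_face_search(price_per_minute: float = 0.15) -> float:
    """Stream processor analyzing video around the clock."""
    return MINUTES_PER_MONTH * price_per_minute


def kinesis_data_streams(shard_hour: float = 0.0195, put_per_million: float = 0.0215) -> float:
    """One provisioned shard plus one PUT payload unit per second."""
    shard_cost = 24 * DAYS * shard_hour
    put_cost = SECONDS_PER_MONTH * put_per_million / 1_000_000
    return shard_cost + put_cost


def lambda_duration(billable_ms: float = 100, price_per_ms: float = 0.0000000017) -> float:
    """One invocation per second at the given billable duration."""
    return billable_ms * SECONDS_PER_MONTH * price_per_ms


if __name__ == "__main__":
    print(f"Rekognition face search: ${rekognition_face_search():,.2f}")
    print(f"Kinesis Data Streams:    ${kinesis_data_streams():,.2f}")
    print(f"Lambda duration:         ${lambda_duration():,.2f}")
```

Face search dominates the total by several orders of magnitude, so stopping the stream processor when it is not needed has by far the largest effect on cost.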